Towards Embodiment Scaling Laws in Robot Locomotion

CoRL 2025

One Model, Two Worlds, Many Embodiments

TLDR: We uncover embodiment scaling laws: training on diverse robot embodiments enables broad generalization to unseen ones, demonstrated in a locomotion study across ~1,000 robots.

Abstract

Cross-embodiment generalization underpins the vision of building generalist embodied agents for any robot, yet its enabling factors remain poorly understood. We investigate embodiment scaling laws, the hypothesis that increasing the number of training embodiments improves generalization to unseen ones, using robot locomotion as a test bed. We procedurally generate ∼1,000 embodiments with topological, geometric, and joint-level kinematic variations, and train policies on random subsets. We observe positive scaling trends supporting the hypothesis, and find that embodiment scaling enables substantially broader generalization than data scaling on fixed embodiments. Our best policy, trained on the full dataset, transfers zero-shot to novel embodiments in simulation and the real world, including the Unitree Go2 and H1. These results represent a step toward general embodied intelligence, with relevance to adaptive control for configurable robots, morphology co-design, and beyond.

Generating ~1000 Robots

To study the effects of embodiment scaling, we procedurally generate GENBOT-1K, a dataset consisting of approximately 1,000 varied robot embodiments, including humanoids, quadrupeds, and hexapods, with different geometry, topology, and kinematics.
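As a rough illustration of what procedural generation along these three axes can look like, here is a minimal sketch. It is not the GENBOT-1K generator: the `Embodiment` fields, the parameter ranges, and the leg-only joint count are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

# Hypothetical mapping from morphology family to number of legs.
LEGS_PER_MORPHOLOGY = {"humanoid": 2, "quadruped": 4, "hexapod": 6}


@dataclass(frozen=True)
class Embodiment:
    morphology: str           # topology: which family the robot belongs to
    joints_per_leg: int       # topology: length of each leg's kinematic chain
    link_scale: float         # geometry: multiplier on nominal link lengths
    joint_range_scale: float  # kinematics: fraction of nominal joint range


def sample_embodiment(rng: random.Random) -> Embodiment:
    """Draw one embodiment; all ranges below are illustrative assumptions."""
    return Embodiment(
        morphology=rng.choice(sorted(LEGS_PER_MORPHOLOGY)),
        joints_per_leg=rng.randint(2, 4),
        link_scale=rng.uniform(0.7, 1.3),
        joint_range_scale=rng.uniform(0.5, 1.0),
    )


def generate_dataset(n: int, seed: int = 0) -> list[Embodiment]:
    """Generate n embodiments reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [sample_embodiment(rng) for _ in range(n)]


def num_actuated_joints(e: Embodiment) -> int:
    """Count leg joints only (a simplification; real humanoids have more)."""
    return LEGS_PER_MORPHOLOGY[e.morphology] * e.joints_per_leg


robots = generate_dataset(1000)
```

Seeding the generator keeps the dataset reproducible, which matters when the same embodiments must be reused across training runs.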

Variations:

Topology

Geometry

Kinematics

Humanoid

Quadruped

Hexapod

Cross-Embodiment Learning

To uncover embodiment scaling laws, we train policies on different random subsets of embodiments, using a single model architecture capable of handling diverse observation and action spaces.
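One common way for a single set of weights to handle robots with different numbers of joints is to encode each joint with a shared network, pool the encodings into a global context, and decode one action per joint. The sketch below illustrates that idea only; it is not the architecture used in this work, and the feature layout (`JOINT_FEAT`), hidden size, and untrained random weights are assumptions.

```python
import numpy as np

JOINT_FEAT = 3  # assumed per-joint features, e.g. position, velocity, last action
HIDDEN = 16     # assumed hidden size

rng = np.random.default_rng(0)
W_enc = rng.normal(scale=0.1, size=(JOINT_FEAT, HIDDEN))  # shared joint encoder
W_dec = rng.normal(scale=0.1, size=(2 * HIDDEN, 1))       # shared joint decoder


def policy(joint_obs: np.ndarray) -> np.ndarray:
    """Map (num_joints, JOINT_FEAT) observations to (num_joints,) actions."""
    h = np.tanh(joint_obs @ W_enc)                    # (J, HIDDEN)
    context = h.mean(axis=0, keepdims=True)           # (1, HIDDEN), size-agnostic
    context = np.repeat(context, h.shape[0], axis=0)  # (J, HIDDEN)
    joint_in = np.concatenate([h, context], axis=1)   # (J, 2 * HIDDEN)
    return np.tanh(joint_in @ W_dec).squeeze(-1)      # (J,), bounded in [-1, 1]


# The same weights serve a 12-joint and an 18-joint robot.
quadruped_actions = policy(rng.normal(size=(12, JOINT_FEAT)))
hexapod_actions = policy(rng.normal(size=(18, JOINT_FEAT)))
```

Because the pooling step averages over joints, neither the weights nor the code depend on the joint count, so observation and action dimensions can differ per robot.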

Embodiment Scaling Laws

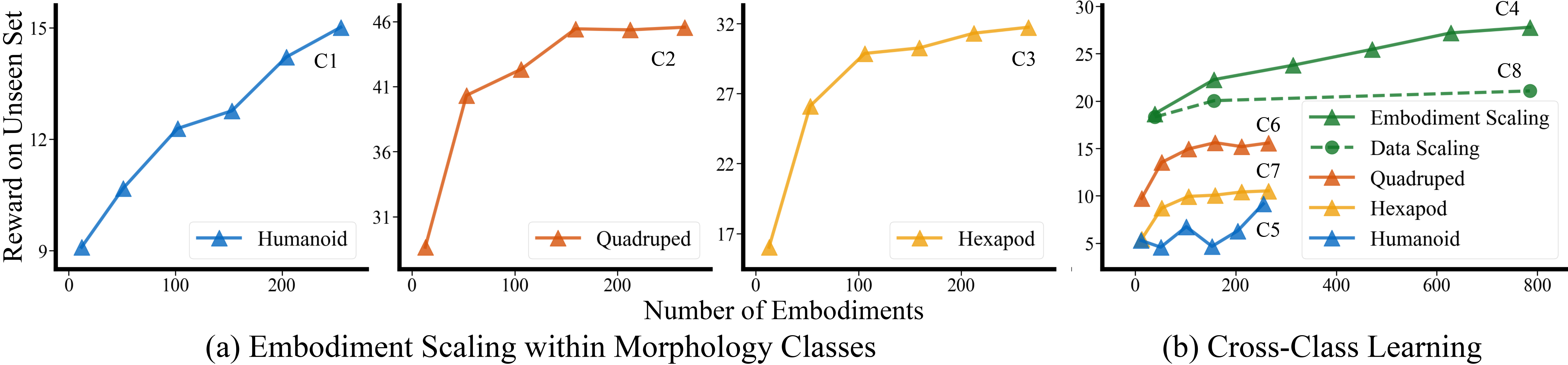

Training generalist locomotion policies on subsets of GENBOT-1K shows that generalization to unseen robots improves steadily as the number of training embodiments increases.

- More training embodiments → better generalization to unseen embodiments (C1–C4)

- Harder embodiments require more training embodiments before generalization saturates (C1 vs C2–C3)

- Cross-morphology training improves generalization (C4 vs C5–C7)

- Embodiment scaling >> pure data scaling for embodiment generalization (C4 vs C8)
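The experimental protocol behind these comparisons, training on random subsets of increasing size and evaluating on robots never seen in training, can be sketched as follows. The function name, the held-out count, and the subset sizes are hypothetical; the actual splits are not stated on this page.

```python
import random


def split_and_subsample(embodiment_ids, num_test, subset_sizes, seed=0):
    """Hold out test embodiments, then draw random training subsets.

    Returns (test_ids, subsets), where subsets maps each requested size
    to a list of training embodiment ids disjoint from test_ids.
    """
    rng = random.Random(seed)
    ids = list(embodiment_ids)
    rng.shuffle(ids)
    test_ids, train_pool = ids[:num_test], ids[num_test:]

    subsets = {}
    for k in subset_sizes:
        if k > len(train_pool):
            raise ValueError(f"subset size {k} exceeds pool of {len(train_pool)}")
        subsets[k] = rng.sample(train_pool, k)  # sampled without replacement
    return test_ids, subsets


# Illustrative numbers only: 1,000 robots, 200 held out, three training scales.
test_ids, subsets = split_and_subsample(
    range(1000), num_test=200, subset_sizes=[10, 100, 800]
)
```

One policy would then be trained per subset and scored on `test_ids`, so any gain with subset size reflects generalization to unseen embodiments rather than memorization of training ones.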

Qualitative Results in Simulation

A single policy controls diverse morphologies in simulation, both seen and novel.

Sim-to-Real and Cross-Embodiment Transfer

Our best learned policy demonstrates both sim-to-real and cross-embodiment transferability. All results shown below use a single policy trained in simulation.

Unitree Go2 with Varying Joint Limits

100% (all knees)

60% (right rear knee)

20% (right rear knee)

60% (front left knee)

40% (front left knee)

20% (front left knee)

Unitree Go2 in Varying Environments

Gravel

Grass

Grass

Pavement

Pavement

Cobblestone

Unitree H1 in Varying Directions

Forward

Backward

Sideward

Unitree H1 under External Perturbations

Pushes

BibTeX

@article{ai2025towards,
  title={Towards Embodiment Scaling Laws in Robot Locomotion},
  author={Ai, Bo and Dai, Liu and Bohlinger, Nico and Li, Dichen and Mu, Tongzhou and Wu, Zhanxin and Fay, K and Christensen, Henrik I and Peters, Jan and Su, Hao},
  journal={Conference on Robot Learning (CoRL)},
  url={https://arxiv.org/abs/2505.05753},
  year={2025}
}

This website was inspired by Kevin Zakka's and builds on Nico Bohlinger's and Bo Ai's.
